Overview

What is Evaligo?

Evaligo helps teams ship reliable AI features by centralizing experiments, evaluations, and production tracing in one workspace. Iterate fast, catch regressions, and keep quality high as prompts and models evolve.

A consistent workflow creates a shared source of truth for product, data, and engineering teams. This reduces friction from scattered scripts, dashboards, and documents.

Built-in evaluators and dashboards get you started quickly, while custom evaluators and flexible integrations let you tailor Evaligo to your domain and requirements.

Everything is designed to move smoothly from experimentation to production, with CI gates and production tracing that together close the feedback loop.

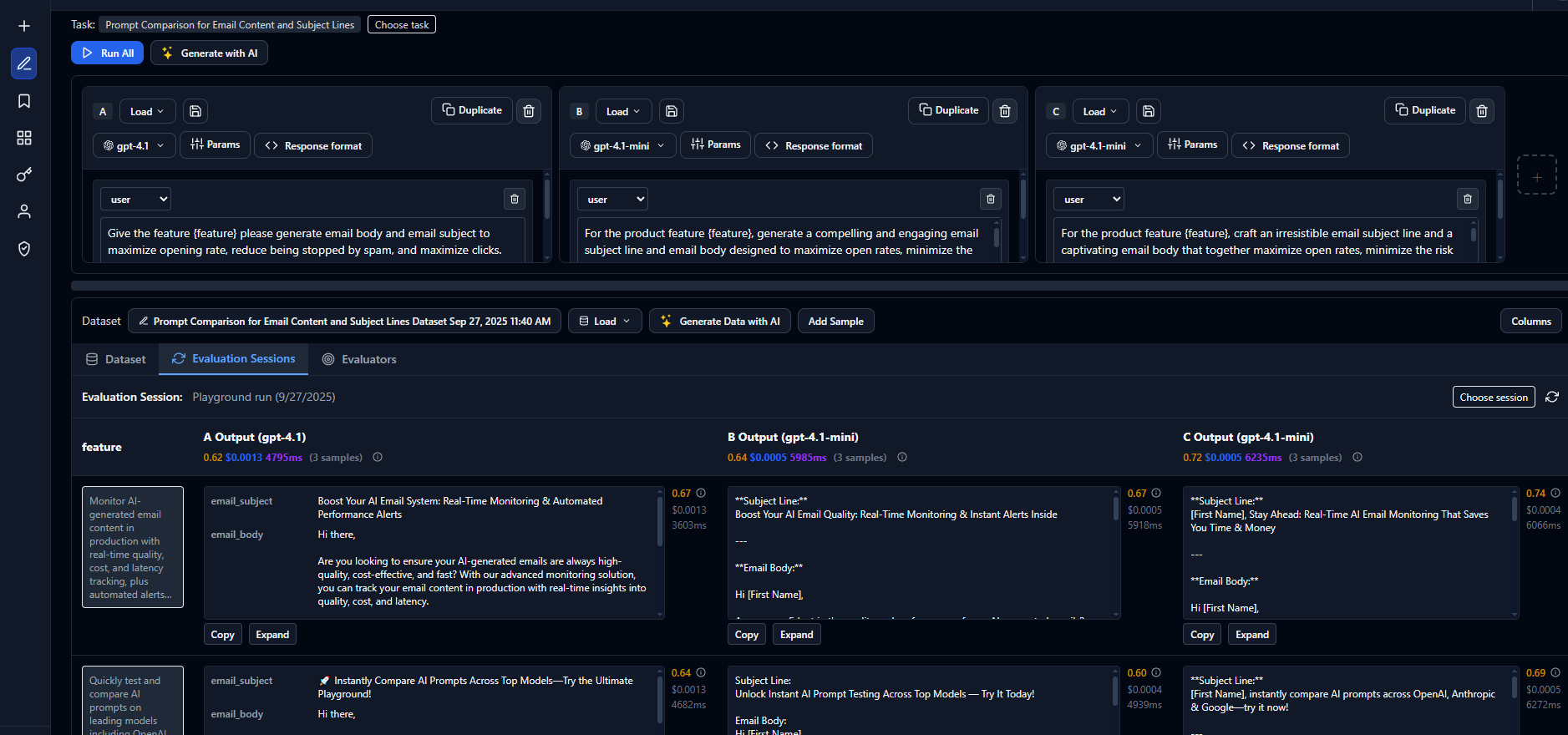

Playground: Interactive Prompt & Model Evaluation

Core building blocks

1. Datasets: Curated inputs and expected behaviors for objective comparisons.
2. Experiments: Run prompt/model variants across datasets and review metrics.
3. Evaluations: Score quality with built-in or custom evaluators and judge models.
4. Tracing: Observe production sessions, costs, and outliers to close the feedback loop.
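To make the idea of a custom evaluator concrete, here is a minimal sketch of one written as a plain Python function. It is not Evaligo's evaluator interface: the name `keyword_coverage` and its signature are hypothetical, chosen only to show the general shape of an evaluator that takes a model output and returns a score between 0 and 1.

```python
def keyword_coverage(output, required_keywords):
    """Return the fraction of required keywords found in the output.

    Hypothetical evaluator for illustration; matching is a
    case-insensitive substring check.
    """
    if not required_keywords:
        # Nothing is required, so the output trivially passes.
        return 1.0
    text = output.lower()
    hits = sum(1 for kw in required_keywords if kw.lower() in text)
    return hits / len(required_keywords)


# Example: a support answer that should mention the refund and its timing.
required = ["refund", "5 days"]
full_score = keyword_coverage("Refunds are issued within 5 days.", required)
partial_score = keyword_coverage("Your refund is pending.", required)
```

A deterministic check like this is cheap to run on every example; a judge model would typically replace the substring test when quality cannot be captured by keywords.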

Working with datasets

Datasets form the foundation of reliable AI evaluation. Start with a curated set of inputs that represent your real-world use cases.
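As a rough illustration, a dataset can be thought of as a list of rows, each pairing an input with the behavior you expect. The sketch below is an assumption for illustration only: the `Example` class, its field names, and the `validate` helper are hypothetical and do not describe Evaligo's dataset format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One dataset row: an input plus the expected behavior for it."""
    input_text: str
    expected: str


# Hypothetical rows for a support-ticket classifier.
DATASET = [
    Example("How do I reset my password?", "account"),
    Example("Where can I download my invoice?", "billing"),
    Example("The app crashes on launch.", "bug report"),
]


def validate(dataset):
    """Reject empty fields and duplicate inputs; return the row count."""
    seen = set()
    for ex in dataset:
        if not ex.input_text.strip() or not ex.expected.strip():
            raise ValueError(f"empty field in {ex!r}")
        if ex.input_text in seen:
            raise ValueError(f"duplicate input: {ex.input_text!r}")
        seen.add(ex.input_text)
    return len(dataset)


row_count = validate(DATASET)
```

Checking for duplicates and empty fields before the first run keeps later comparisons objective, since every variant is scored against the same clean set of cases.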

Running experiments

Compare different prompts, models, and parameters side by side to understand what works best for your specific use case.
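The comparison described above can be sketched in a few lines: run every variant over the same dataset, score each output, and average the scores per variant. Everything here is hypothetical: `run_experiment`, `exact_match`, and the two stand-in variants are illustrative names, and the variants return canned strings instead of calling a real model.

```python
def exact_match(output, expected):
    """Score 1.0 when output equals expected, ignoring case and outer spaces."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_experiment(variants, dataset, evaluator):
    """Return the mean evaluator score for each named variant."""
    results = {}
    for name, generate in variants.items():
        scores = [evaluator(generate(inp), exp) for inp, exp in dataset]
        results[name] = sum(scores) / len(scores)
    return results


# Tiny dataset of (input, expected) pairs.
dataset = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]


# Stand-ins for two prompt/model variants (canned answers, no model call).
def variant_a(text):
    return {"2+2": "4", "capital of France": "paris", "3*3": "6"}.get(text, "")


def variant_b(text):
    return {"2+2": "4", "capital of France": "Paris", "3*3": "9"}.get(text, "")


scores = run_experiment({"a": variant_a, "b": variant_b}, dataset, exact_match)
best = max(scores, key=scores.get)
```

Holding the dataset and evaluator fixed while changing only the variant is what makes the resulting numbers comparable.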

Getting started workflow

Most teams follow this progression when adopting Evaligo:

Phase 1: Foundation

Set up your first project and import an initial dataset to establish your evaluation baseline.

Phase 2: Experimentation

Run experiments to compare different approaches and identify the best-performing variants.

Phase 3: Production

Deploy with confidence using tracing to monitor real-world performance and catch regressions early.
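One way to picture catching a regression, whether in a CI gate or from production metrics, is a comparison between a baseline and a candidate with a small tolerance. This is a sketch under stated assumptions, not Evaligo's gating mechanism: the function `find_regressions`, the metric names, and the 0.02 tolerance are all hypothetical.

```python
def find_regressions(baseline, candidate, tolerance=0.02):
    """Return metrics where the candidate fell more than `tolerance`
    below the baseline, or where the candidate has no value at all."""
    failed = {}
    for metric, base in baseline.items():
        new = candidate.get(metric)
        if new is None or base - new > tolerance:
            failed[metric] = (base, new)
    return failed


# Hypothetical scores (higher is better) for two releases.
baseline = {"accuracy": 0.90, "groundedness": 0.85}
candidate = {"accuracy": 0.91, "groundedness": 0.80}

failed = find_regressions(baseline, candidate)
gate_passed = not failed
```

In a CI job, a script like this would typically exit with a non-zero status when `gate_passed` is false, so the pipeline blocks the change until the drop is investigated.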