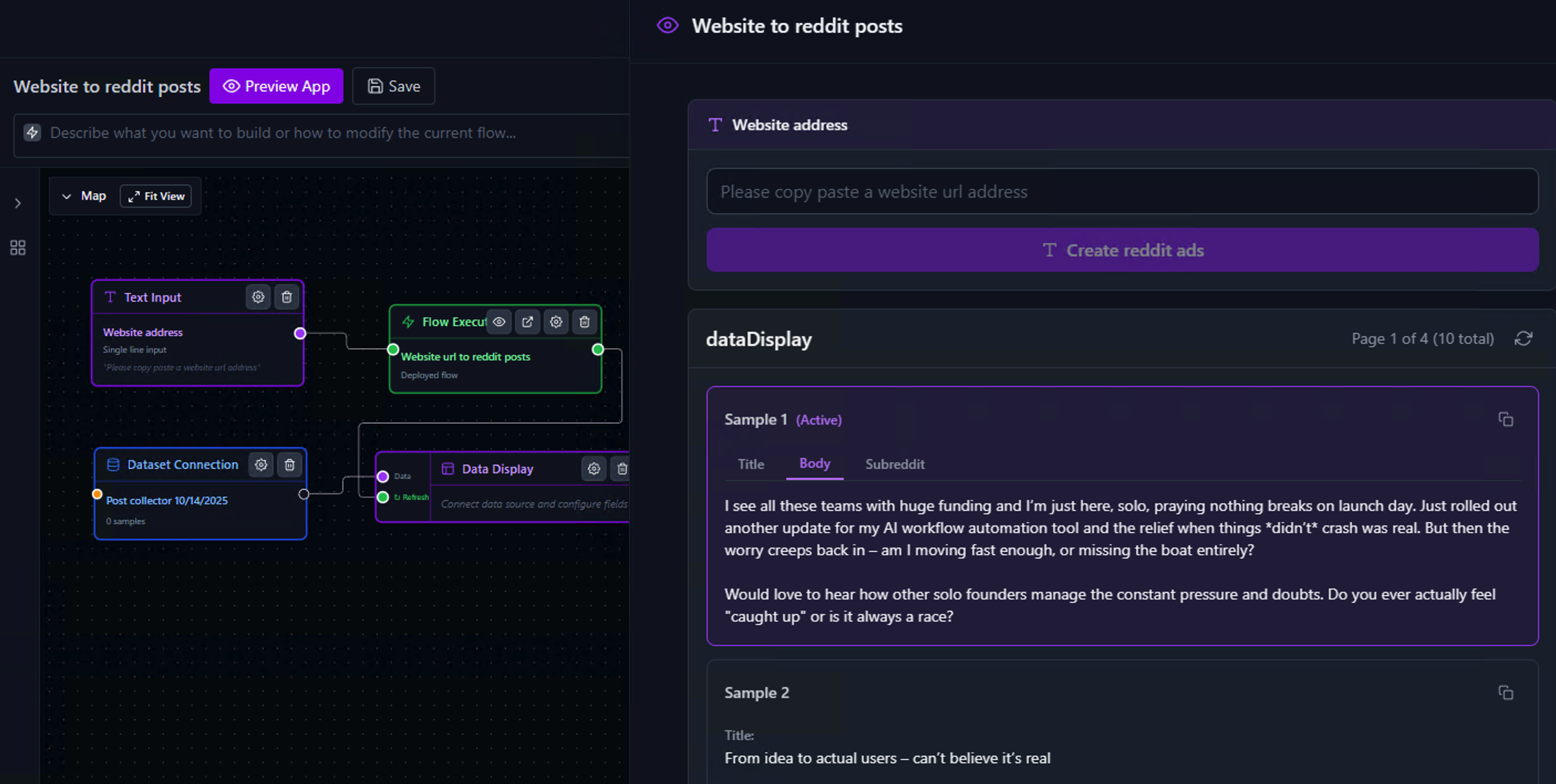

Website to Reddit Posts Automation

Build an automation that crawls any website and generates 15 authentic, community-friendly Reddit posts for founder subreddits. Perfect for sharing product updates without manual content adaptation.

Two-Layer Architecture

Flow Playground

The crawling and content generation engine. Analyzes websites, extracts key pages, and generates Reddit posts.

App Playground

The user interface. Simple URL input that displays generated posts in a clean gallery.

Part 1: The Website Crawler & Reddit Generator Flow

This flow crawls a website, identifies the 3 most marketing-relevant pages, and generates 5 unique Reddit posts for each page (15 total). Let's see the complete flow first.

Complete Flow (Left to Right)

Breaking Down Each Node

Receives website URL from the app. Starting point for the entire process.

Crawls the website and maps its structure with page metadata.

- Depth 1: homepage plus one level of links

- Max 3 pages: keeps results fast

- Same site only: stays within the domain
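To make the three crawl limits concrete, here is a minimal sketch of how they could interact. It is not the platform's crawler: `plan_crawl` and the `site` link map are hypothetical, and the link map stands in for real page fetching so the example runs offline.

```python
from collections import deque
from urllib.parse import urlparse


def plan_crawl(start_url, links, max_depth=1, max_pages=3):
    """Return the URLs a crawler with these limits would visit, breadth-first.

    `links` maps each URL to the URLs it links to (a stand-in for fetching).
    """
    domain = urlparse(start_url).netloc
    visited = [start_url]
    queue = deque([(start_url, 0)])
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        if depth >= max_depth:
            continue  # depth limit: do not follow links from this page
        for target in links.get(url, []):
            if len(visited) >= max_pages:
                break  # page limit reached
            same_site = urlparse(target).netloc == domain
            if same_site and target not in visited:
                visited.append(target)
                queue.append((target, depth + 1))
    return visited


# Hypothetical link map for an example site.
site = {
    "https://myproduct.com/": [
        "https://myproduct.com/pricing",
        "https://twitter.com/myproduct",  # other domain, skipped
        "https://myproduct.com/features",
        "https://myproduct.com/blog",  # dropped by the 3-page limit
    ],
    "https://myproduct.com/pricing": [
        "https://myproduct.com/pricing/enterprise",  # depth 2, never reached
    ],
}

pages = plan_crawl("https://myproduct.com/", site)
print(pages)
```

With these settings the plan contains the homepage plus the first two same-site links, which is why the crawl finishes quickly.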

Output: a tree structure of all URLs with metadata.

AI analyzes the crawl tree and picks the 3 most marketing-relevant pages.

Output: an array of 3 URLs with summaries.

Splits 3 URLs into separate threads. Processes all pages simultaneously.

Parallel: ~20s total, roughly 3x faster than processing the three pages one after another.
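The split step can be pictured as a small thread pool with one worker per page. This is only an illustrative sketch: `process_page` is a hypothetical placeholder for the fetch, extract, and generate steps, and the sleep simulates their latency.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def process_page(url):
    """Hypothetical stand-in for fetch + extract + generate on one page."""
    time.sleep(0.2)  # simulated per-page latency
    return f"posts for {url}"


urls = [
    "https://myproduct.com/",
    "https://myproduct.com/pricing",
    "https://myproduct.com/features",
]

# One worker per page, so all three pages are processed at the same time.
with ThreadPoolExecutor(max_workers=len(urls)) as pool:
    results = list(pool.map(process_page, urls))  # results keep input order

print(results)
```

Because the three workers wait at the same time, total time is close to the slowest single page rather than the sum of all three.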

Fetch HTML → Extract clean text. Removes tags, scripts, styles.

- Raw HTML confuses AI

- Clean text = better posts

- Lower token costs

Output: 500-2000 words of clean text per page.
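As a rough idea of what "remove tags, scripts, styles" involves, the sketch below uses only Python's standard `html.parser`. The `TextExtractor` class and `extract_text` helper are hypothetical names, and the platform's own extraction node may work differently.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text and ignores anything inside <script> or <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped element.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return " ".join(self._chunks)


def extract_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


sample = (
    "<html><head><style>p{color:red}</style>"
    "<script>var x = 1;</script></head>"
    "<body><h1>Ship faster</h1><p>Built for founders.</p></body></html>"
)
clean = extract_text(sample)
print(clean)
```

The cleaned string contains only the heading and paragraph text, which is what gets passed on to post generation.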

Creates 5 authentic Reddit posts per page. Runs 3x in parallel = 15 total posts.

- html: Clean text

- url: Source page

Combines 3 arrays into one. [[5], [5], [5]] → [15 posts]

Iterator needs a flat array, not nested ones.
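The flatten step is equivalent to the following small sketch. The post strings are placeholders for illustration, not real output.

```python
from itertools import chain

# Three parallel branches, each returning 5 posts (placeholder strings).
per_page = [[f"page{p}-post{i}" for i in range(1, 6)] for p in range(1, 4)]

# Flatten [[5], [5], [5]] into a single list of 15 posts.
all_posts = list(chain.from_iterable(per_page))

print(len(all_posts))
```

Order is preserved: all posts for the first page come first, then the second page, then the third.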

Loops through all 15 posts one by one.

Dataset writes are fast (~50 ms each), so 15 writes take under a second (15 × 50 ms = 750 ms). No need to parallelize.

Saves all 15 posts to cloud storage.

- Display in App UI gallery

- Post library for reference

- Filter by subreddit

- History of all content
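To illustrate how saved posts could support the uses listed above, here is a minimal in-memory sketch. The `Post` fields and the `Dataset` class are assumptions made for this example; the real dataset is cloud storage managed by the platform and its schema is not shown in this guide.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    # Hypothetical schema for one generated post.
    subreddit: str
    title: str
    body: str
    source_url: str


@dataclass
class Dataset:
    """In-memory stand-in for the cloud dataset."""

    rows: list = field(default_factory=list)

    def write(self, post):
        self.rows.append(post)

    def by_subreddit(self, name):
        return [p for p in self.rows if p.subreddit == name]


posts = [
    Post("r/entrepreneur", "What we learned shipping v2", "...", "https://myproduct.com/"),
    Post("r/SideProject", "Built a tool for founders", "...", "https://myproduct.com/features"),
    Post("r/entrepreneur", "How we price our product", "...", "https://myproduct.com/pricing"),
]

dataset = Dataset()
for post in posts:  # sequential writes, one post at a time, like the iterator
    dataset.write(post)

entrepreneur_posts = dataset.by_subreddit("r/entrepreneur")
print(len(entrepreneur_posts))
```

Keeping the subreddit and source URL on every row is what makes filtering and a browsable history possible later.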

Input (URL) → Crawler (map site) → Extract URLs (top 3 pages) → Split (3 threads) → Fetch + Extract (per page) → Generate Posts (5 per page, 15 total) → Flatten → Iterator → Dataset (save all posts)

Part 2: The App Playground (User Interface)

Now that we have the flow built, let's create the simple UI that lets users input a URL and view generated posts.

Complete App Structure

How It Works Together:

1. Paste URL: "https://myproduct.com"

2. Click "Create reddit posts" button

3. Wait ~60 seconds (progress indicator shows)

4. View 15 generated Reddit posts

5. Copy posts to share in r/entrepreneur, r/SideProject, etc.

Deployment

REST API

Deploy as an API endpoint

Embed Widget

Embed the App UI in your website

Ready to Automate Your Reddit Presence?

Start building with Evaligo's visual workflow builder. No coding required.